Here’s a round-up of some of NVIDIA’s announcements today at the opening of the GTC conference.

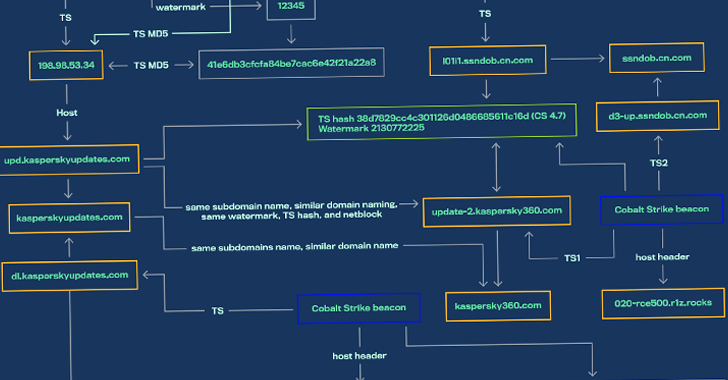

NVIDIA Lithography Software Adopted by ASML, TSMC and Synopsys

NVIDIA cuLitho

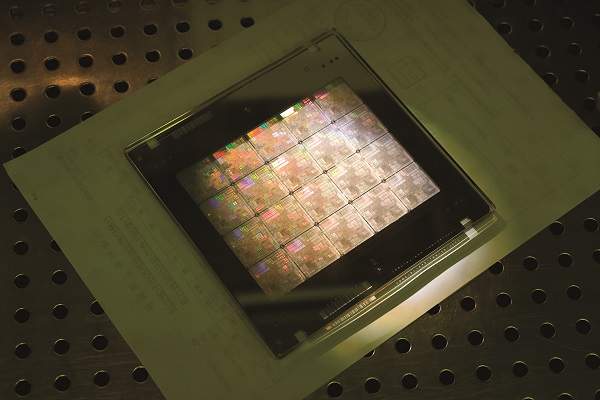

NVIDIA today announced what it said is a breakthrough that brings accelerated computing to the field of computational lithography and could lay the foundation for 2nm chips “just as current production processes are nearing the limits of what physics makes possible,” NVIDIA said.

Running on GPUs, cuLitho delivers a performance boost of up to 40x beyond current lithography (the process of creating patterns on a silicon wafer), accelerating the massive computational workloads that currently consume tens of billions of CPU hours every year. NVIDIA said it enables 500 NVIDIA DGX H100 systems to do the work of 40,000 CPU systems, running all parts of the computational lithography process in parallel and helping to reduce power needs and potential environmental impact.
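As a rough illustration of the scale claim, the ratios implied by NVIDIA’s quoted figures can be worked out directly. This is a back-of-envelope sketch, not NVIDIA’s methodology; it assumes each DGX H100 system holds eight H100 GPUs (the standard DGX H100 configuration), and the function name `culitho_ratios` is hypothetical.

```python
# Back-of-envelope ratios implied by NVIDIA's cuLitho figures.
# Assumption (not stated in the announcement): each DGX H100 system has 8 H100 GPUs.

DGX_SYSTEMS = 500        # DGX H100 systems quoted by NVIDIA
CPU_SYSTEMS = 40_000     # CPU systems whose work they are said to match
GPUS_PER_DGX = 8         # assumed standard DGX H100 configuration


def culitho_ratios(dgx_systems: int, cpu_systems: int, gpus_per_dgx: int) -> dict:
    """Return the total GPU count and how many CPU systems each DGX system / GPU stands in for."""
    total_gpus = dgx_systems * gpus_per_dgx
    return {
        "total_gpus": total_gpus,
        "cpu_systems_per_dgx": cpu_systems / dgx_systems,
        "cpu_systems_per_gpu": cpu_systems / total_gpus,
    }


if __name__ == "__main__":
    for name, value in culitho_ratios(DGX_SYSTEMS, CPU_SYSTEMS, GPUS_PER_DGX).items():
        print(f"{name}: {value:g}")
```

Under that assumption, each DGX H100 system stands in for 80 CPU systems, or 10 per GPU. This compares system counts only; it is a different metric from the “up to 40x” performance figure.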

The NVIDIA cuLitho software library for computational lithography is being integrated by chip foundry TSMC and electronic design automation company Synopsys into their software, manufacturing processes and systems for the latest-generation NVIDIA Hopper architecture GPUs. Equipment maker ASML is working with NVIDIA on GPUs and cuLitho, and is planning to integrate support for GPUs into its computational lithography software products.

The advance will enable chips with smaller transistors and wires than are now achievable, while accelerating time to market and boosting the energy efficiency of the data centers that drive the manufacturing process, NVIDIA said.

“The chip industry is the foundation of nearly every other industry in the world,” said Jensen Huang, founder and CEO of NVIDIA. “With lithography at the limits of physics, NVIDIA’s introduction of cuLitho and collaboration with our partners TSMC, ASML and Synopsys allows fabs to increase throughput, reduce their carbon footprint and set the foundation for 2nm and beyond.”

NVIDIA said fabs using cuLitho could help produce 3-5x more photomasks (the templates for a chip’s design) per day, using 9x less power than current configurations. A photomask that required two weeks can now be processed overnight.

Longer term, cuLitho will enable better design rules, higher density, higher yields and AI-powered lithography, the company said.

The full announcement can be found here.

DGX Cloud


NVIDIA announced NVIDIA DGX Cloud, an AI supercomputing service designed to give enterprises access to the infrastructure and software needed to train advanced models for generative AI and other applications.

The offering provides dedicated clusters of DGX AI supercomputing paired with NVIDIA AI software. The service is accessed through a web browser and is designed to avoid the complexity of deploying on-premises infrastructure. Enterprises rent DGX Cloud clusters on a monthly basis, enabling rapid deployment and scaling, NVIDIA said.

“We’re at the iPhone moment of AI. Startups are racing to build disruptive products and business models, and incumbents are looking to respond,” said Huang. “DGX Cloud gives customers instant access to NVIDIA AI supercomputing in global-scale clouds.”

NVIDIA said it is partnering with cloud service providers to host DGX Cloud infrastructure, starting with Oracle Cloud Infrastructure. The OCI Supercluster provides an RDMA network, bare-metal compute and high-performance local and block storage that can scale to over 32,000 GPUs.

Microsoft Azure is expected to begin hosting DGX Cloud next quarter, followed by Google Cloud and others.

More information can be found here.

NVIDIA H100s on Public Cloud Platforms

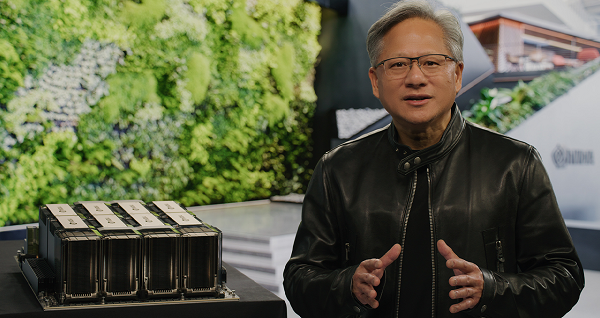

Jensen Huang with DGX H100 at GTC 2023

NVIDIA and partners today announced the availability of new products and services featuring the NVIDIA H100 Tensor Core GPU for generative AI training and inference.

Oracle Cloud Infrastructure (OCI) announced the limited availability of new OCI Compute bare-metal GPU instances featuring H100 GPUs. Additionally, Amazon Web Services announced its forthcoming EC2 UltraClusters of Amazon EC2 P5 instances, which can scale up to 20,000 interconnected H100 GPUs. This follows Microsoft Azure’s private preview announcement last week of its H100 virtual machine, ND H100 v5.

In addition, Meta has now deployed its H100-powered Grand Teton AI supercomputer internally for its AI production and research teams.

“Generative AI’s incredible potential is inspiring virtually every industry to reimagine its business strategies and the technology required to achieve them,” said Huang. “NVIDIA and our partners are moving fast to provide the world’s most powerful AI computing platform to those building applications that will fundamentally transform how we live, work and play.”

The H100, based on the NVIDIA Hopper GPU computing architecture with its built-in Transformer Engine, is optimized for developing, training and deploying generative AI, large language models (LLMs) and recommender systems. This technology makes use of the H100’s FP8 precision and offers 9x faster AI training and up to 30x faster AI inference on LLMs versus the prior-generation A100, NVIDIA said. The H100 began shipping in the fall in individual and select board units from global manufacturers.

Several generative AI companies are adopting H100 GPUs, including ChatGPT creator OpenAI, Meta in the development of its Grand Teton AI supercomputer, and generative AI company Stability.ai.

More information can be found here.

NVIDIA and AWS for Generative AI

Amazon Web Services and NVIDIA will collaborate on a scalable AI infrastructure for training large language models (LLMs) and developing generative AI applications.


The project involves Amazon EC2 P5 instances powered by NVIDIA H100 Tensor Core GPUs, along with AWS networking and scalability that the companies say will deliver up to 20 exaFLOPS of compute performance. They said P5 instances will be the first GPU-based instances to be combined with AWS’s second-generation Elastic Fabric Adapter networking, which provides 3,200 Gbps of low-latency, high-bandwidth networking throughput, enabling customers to scale up to 20,000 H100 GPUs in EC2 UltraClusters.

P5 instances feature eight NVIDIA H100 GPUs capable of 16 petaFLOPS of mixed-precision performance, 640 GB of high-bandwidth memory, and 3,200 Gbps networking connectivity (8x more than the previous generation) in a single EC2 instance. P5 instances accelerate the time to train machine learning (ML) models by up to 6x, and the additional GPU memory helps customers train larger, more complex models, the companies said. According to NVIDIA, P5 instances are expected to lower the cost of training ML models by up to 40 percent over the previous generation.
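The per-instance P5 figures can be split into per-GPU shares with simple division. The sketch below uses only the numbers in this announcement (8 GPUs, 640 GB, 16 petaFLOPS, 3,200 Gbps, 20,000-GPU UltraClusters); it assumes resources divide evenly across the eight GPUs, and the names `P5Instance`, `per_gpu` and `instances_for` are hypothetical, not part of any AWS API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class P5Instance:
    """Hypothetical container for the per-instance P5 figures quoted by AWS and NVIDIA."""
    gpus: int = 8
    hbm_gb: float = 640.0          # high-bandwidth memory per instance
    petaflops: float = 16.0        # mixed-precision compute per instance
    network_gbps: float = 3_200.0  # EFA networking per instance

    def per_gpu(self) -> dict:
        # Assumption: memory, compute and bandwidth are split evenly across GPUs.
        return {
            "hbm_gb": self.hbm_gb / self.gpus,
            "petaflops": self.petaflops / self.gpus,
            "network_gbps": self.network_gbps / self.gpus,
        }


def instances_for(gpu_count: int, inst: P5Instance) -> int:
    """Number of whole P5 instances needed to reach gpu_count GPUs (ceiling division)."""
    return -(-gpu_count // inst.gpus)


if __name__ == "__main__":
    p5 = P5Instance()
    print(p5.per_gpu())
    print(instances_for(20_000, p5))
```

Under the even-split assumption, each GPU gets 80 GB of memory, 2 petaFLOPS of mixed-precision compute and 400 Gbps of network bandwidth, and a 20,000-GPU UltraCluster corresponds to 2,500 P5 instances.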

BioNeMo Generative AI Services for Life Sciences

NVIDIA introduced a set of generative AI cloud services designed for customizing AI foundation models to accelerate the creation of new proteins and therapeutics, as well as research in genomics, chemistry, biology and molecular dynamics.


Part of NVIDIA AI Foundations, the BioNeMo Cloud service offering, for both AI model training and inference, accelerates the time-consuming and costly stages of drug discovery, NVIDIA said. It enables researchers to fine-tune generative AI applications on their proprietary data, and to run AI model inference directly in a web browser or through new cloud APIs that integrate into existing applications.

“The transformative power of generative AI holds enormous promise for the life science and pharmaceutical industries,” said Kimberly Powell, vice president of healthcare at NVIDIA. “NVIDIA’s long collaboration with pioneers in the field has led to the development of BioNeMo Cloud Service, which is already serving as an AI drug discovery laboratory. It provides pretrained models and allows customization of models with proprietary data that serve every stage of the drug-discovery pipeline, helping researchers identify the right target, design molecules and proteins, and predict their interactions in the body to develop the best drug candidate.”

BioNeMo now has six open-source models, in addition to its previously announced MegaMolBART generative chemistry model, ESM1nv protein language model and OpenFold protein structure prediction model. The pretrained AI models are designed to help researchers build AI pipelines for drug development.

Generative AI models can identify potential drug molecules, in some cases designing compounds or protein-based therapeutics from scratch. Trained on large-scale datasets of small molecules, proteins, and DNA and RNA sequences, these models can predict the 3D structure of a protein and how well a molecule will dock with a target protein.

BioNeMo has been adopted by drug-discovery companies including Evozyne and Insilico Medicine, along with biotechnology company Amgen.

“BioNeMo is dramatically accelerating our approach to biologics discovery,” said Peter Grandsard, executive director of Biologics Therapeutic Discovery, Center for Research Acceleration by Digital Innovation at Amgen. “With it, we can pretrain large language models for molecular biology on Amgen’s proprietary data, enabling us to explore and develop therapeutic proteins for the next generation of medicine that will help patients.”

More information can be found here.

Source: https://insidehpc.com/2023/03/nvidia-claims-iphone-moment-of-ai-at-gtc-announces-raft-of-ai-related-chips-systems-and-services/